Primate Labs has just announced Geekbench AI 1.0, a significant upgrade to the benchmark previously called Geekbench ML. The suite is designed to provide an in-depth evaluation of CPU, GPU, and NPU performance across all major platforms, including Android, iOS, and desktop.

It benchmarks real-world AI workloads with a high degree of accuracy, giving developers, engineers, and tech-savvy users a tool to evaluate and optimize device performance. Whether for tuning apps or benchmarking hardware, Geekbench AI 1.0 is likely to become one of the standard tools in AI benchmarking.

Geekbench AI builds on the foundations laid by earlier preview releases to provide a robust benchmark suite for machine learning, deep learning, and AI-centric workloads. The tool keeps Geekbench's cross-platform compatibility and its focus on workloads that reflect real-world use, which makes it valuable to software developers, hardware engineers, and performance enthusiasts alike.

A strategic name change

The benchmark was previously known as "Geekbench ML" and has now been renamed to the more current "Geekbench AI." The change follows the industry, which has settled on the term "AI" for this class of workloads. The new name should make it clearer to users, engineers and enthusiasts alike, what the benchmark measures.

The complexity of performance measurement

Measuring AI performance is hard. The difficulty lies not in running the tests but in choosing tests that reflect the performance that actually matters, and this is especially hard across different platforms. Primate Labs addresses this by designing tests that mirror the real-world use cases developers encounter, informed by ongoing discussions with software and hardware engineers across the industry.

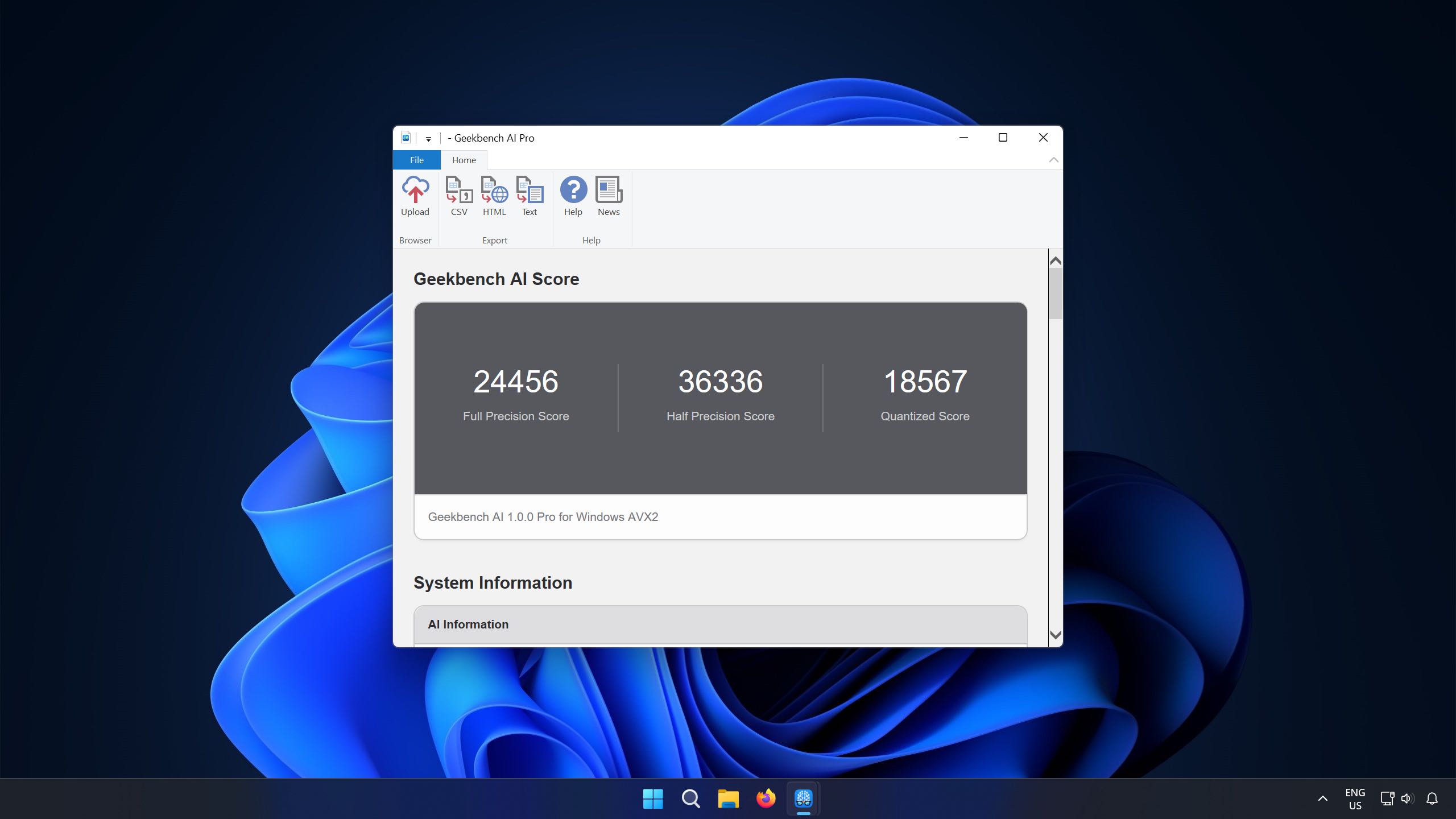

Three scores for a multifaceted landscape

Because AI hardware comes in many different designs and developers use it in different ways, Geekbench AI reports three overall performance scores. This multidimensional approach gives a fuller picture of a device's AI capabilities, accounting for differences in precision levels, tasks, and hardware configurations.

Speed and accuracy combined

Geekbench AI still measures how fast a system runs each workload, but it also adds a component to the score that reflects how accurately those workloads are performed. For example, a device might run object detection quickly, but if the model's detection quality is poor, that speed does not count for much. By incorporating accuracy into its performance scores, the benchmark provides a more complete assessment and helps developers strike a balance between efficiency and precision.

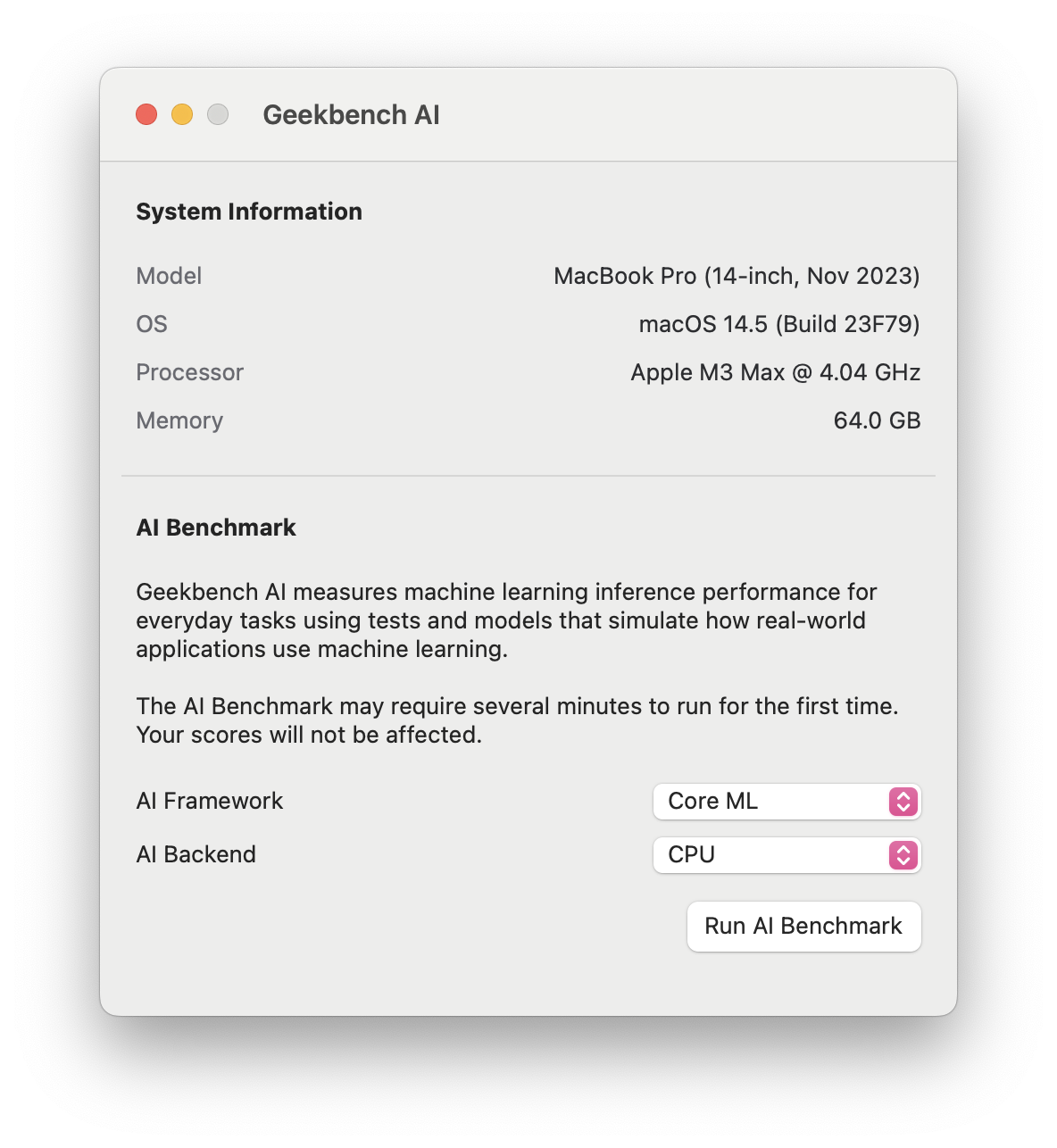

Expanded framework support and realistic data

Geekbench AI 1.0 adds support for frameworks such as OpenVINO and TensorFlow Lite delegates, among others, making it compatible with the tools many developers use today. It also uses more extensive datasets that better represent real-world inputs, so users get more relevant and accurate results.

Geekbench AI is now reliable enough for developers to integrate it into their workflows with confidence. That said, because the AI landscape is still changing, Primate Labs remains committed to updating Geekbench AI to keep pace with new developments. Developers and engineers can also provide feedback to help refine the tool.

Availability

Geekbench AI 1.0 is now generally available, with downloads for Windows, macOS, and Linux. It is also available on the Google Play Store and the Apple App Store, covering all major platforms.